Multi-edge server-assisted dynamic federated learning with an optimized floating aggregation point

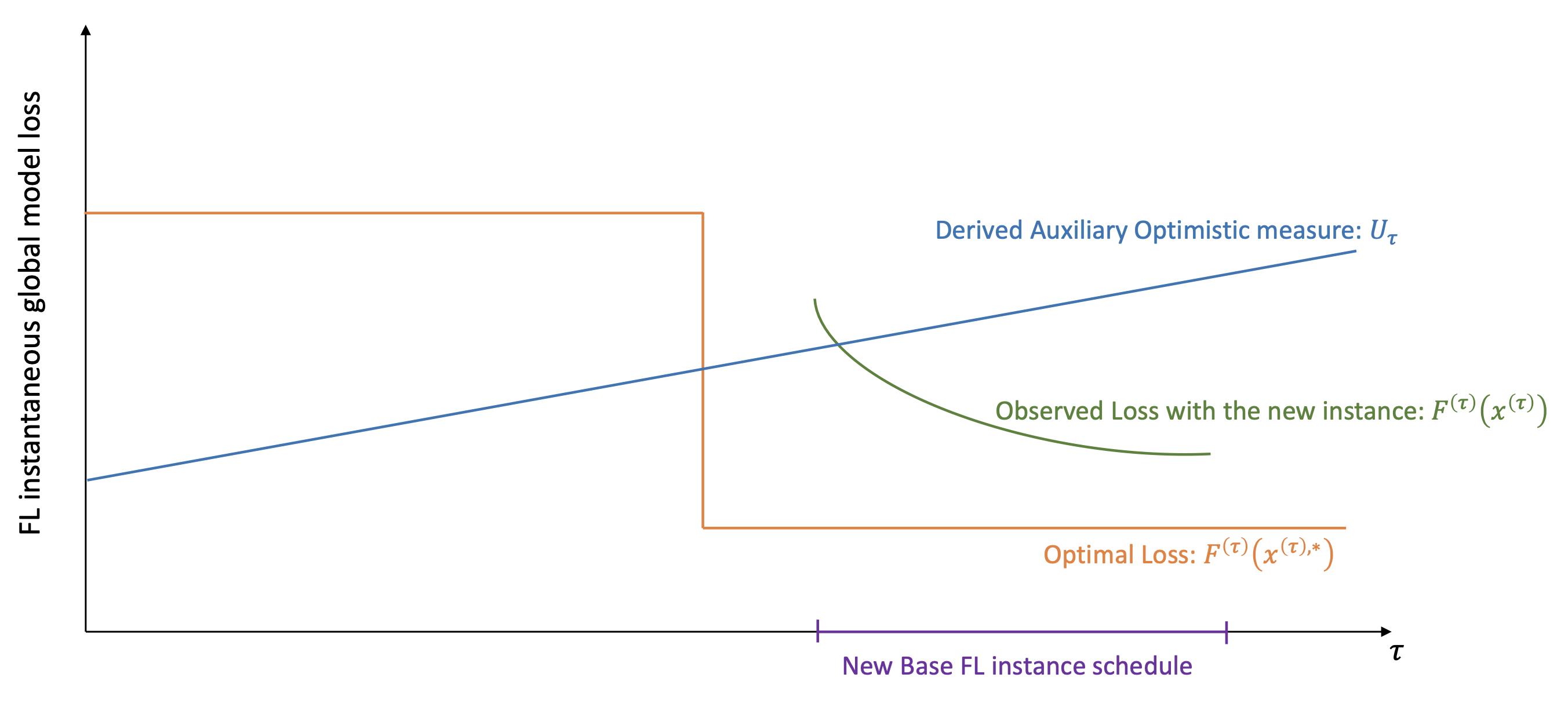

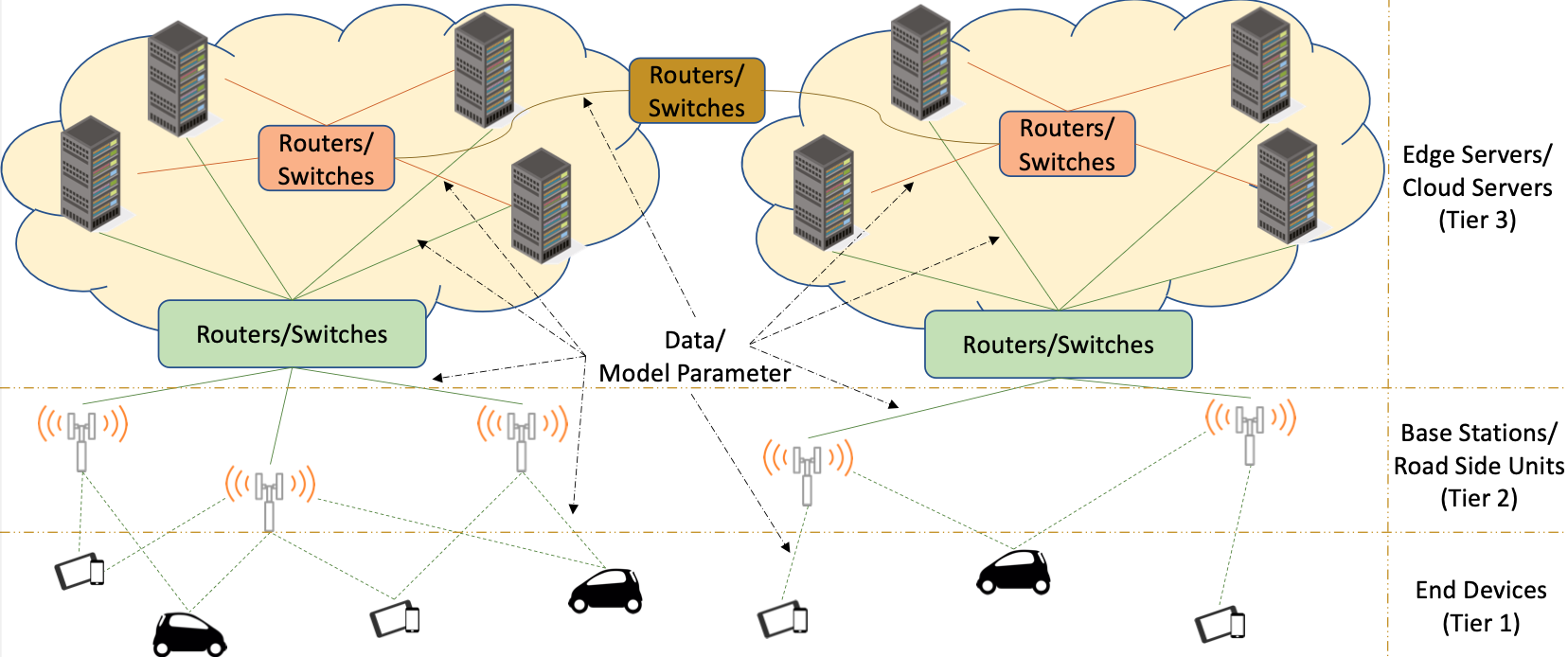

Cooperative Edge-Assisted Dynamic Federated Learning (CE-FL) introduces a distributed machine learning (ML) architecture in which data is collected at end devices and model training is performed cooperatively at both the end devices and the edge servers. Data is offloaded from the end devices to the edge servers through base stations. CE-FL features a floating aggregation point: the local models from devices and servers are aggregated at an edge server that can change from one training round to the next, adapting to changes in data distribution and user mobility. CE-FL accounts for network heterogeneity in communication, computation, and proximity, and it operates in a dynamic environment where online variations in the data affect ML model performance. We model the CE-FL process and provide an analytical convergence analysis of its ML model training.
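To make the floating aggregation point concrete, the following is a minimal sketch, not the paper's method. It assumes models are plain parameter lists, aggregation is a sample-weighted average, and the aggregator for each round is the edge server with the lowest total upload cost. The names `weighted_average`, `pick_aggregator`, and the cost tables are hypothetical, and the selection rule is a stand-in for the optimization the paper formulates.

```python
from typing import Dict, List


def weighted_average(models: Dict[str, List[float]],
                     num_samples: Dict[str, int]) -> List[float]:
    """Average local models, weighting each node by its number of data points."""
    total = sum(num_samples[node] for node in models)
    dim = len(next(iter(models.values())))
    return [
        sum(num_samples[node] * models[node][i] for node in models) / total
        for i in range(dim)
    ]


def pick_aggregator(servers: List[str],
                    upload_cost: Dict[str, Dict[str, float]]) -> str:
    """Hypothetical rule: pick the server with the lowest summed upload cost.

    upload_cost[node][server] is the assumed cost for `node` to send its
    local model to `server` in the current round.
    """
    return min(servers, key=lambda s: sum(cost[s] for cost in upload_cost.values()))


# Local models trained at one device and one edge server (toy values).
models = {"device_a": [1.0, 2.0], "server_1": [3.0, 4.0]}
num_samples = {"device_a": 1, "server_1": 3}
servers = ["server_1", "server_2"]

# Round 0: the device is close to server_1.
cost_round0 = {
    "device_a": {"server_1": 1.0, "server_2": 5.0},
    "server_1": {"server_1": 0.0, "server_2": 2.0},
}
# Round 1: the device has moved close to server_2, so the costs change.
cost_round1 = {
    "device_a": {"server_1": 6.0, "server_2": 1.0},
    "server_1": {"server_1": 0.0, "server_2": 2.0},
}

aggregator_round0 = pick_aggregator(servers, cost_round0)
aggregator_round1 = pick_aggregator(servers, cost_round1)
global_model = weighted_average(models, num_samples)
```

In this toy setup the aggregation point moves from `server_1` to `server_2` once the device's upload costs shift, while the aggregated model itself depends only on the local models and their sample counts.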

Bhargav Ganguly*, Seyyedali Hosseinalipour*, Kwang Taik Kim, Christopher G Brinton, Vaneet Aggarwal, David J Love, Mung Chiang

IEEE/ACM Transactions on Networking, 2023